Whack the Whack-A-Mole

The AI Act offers a chance to ban a dangerous type of program that keeps popping up

You’re sitting at the bar between your drunk friend and the door, making the case he should hand over the keys, accept a ride, and sleep on your sofa. I’ve been thinking about how to do that with Steve (pictured below) as he gets ready to do something stupid. But guys, we have this problem every other week. If it’s not Steve, it’s someone else. We should really be thinking about how to change the pattern.

The European Council is preparing to carve the usual security exemptions out of the European Parliament’s draft of the AI Act, which proposes to limit dangerous tech much more than the preceding (Commission) version did. (The Council is EU heads of state, the European Council President, and the European Commission President.) One path to potentially convincing the Council to keep the Parliament’s proposed bans on emotion-recognition AI, real-time biometrics and predictive policing in public spaces, and social scoring is to explain the implications of probability theory for this type of program. These are all mass security screenings for low-prevalence problems.

The mathematical structure of these programs is the same, and the AI Act offers a chance to ban them as a category. A sensible legal regime would regulate this dangerous structure instead of continuing to play Whack-A-Mole with these stupid programs — like European Commission Horizon 2020-funded bullshit AI “lie detector” iBorderCtrl, U.S. Department of Homeland Security social media scoring program Night Fury, and proposed EU digital communications scanning program Chat Control and UK analogue Online Safety Bill. But this is not currently being discussed, in part because the larger pattern is not being widely identified as such, and in part because three cognitive biases contribute to widespread misunderstanding of this type of program: (1) dichotomania, (2) base rate blindness, and (3) reification. Correcting these distortions shows this type of program is dumb.

Why Mass Security Screenings for Low-Prevalence Problems Are Dumb

(1) Dichotomania

Leading statistics reformer and UCLA epidemiology/statistics professor emeritus Sander Greenland defines dichotomania as “the compulsion to perceive quantities as dichotomous even when dichotomization is unnecessary and misleading.” There are two typical ways of representing outcome estimates for this type of program, and they both suffer from dichotomania. Better outcome estimates involve frequency format confusion matrices showing misses and hits in terms of false and true positives and negatives; they’re better because people can make better statistical inferences from them according to risk communication pioneer Gerd Gigerenzer. By contrast, worse representations cite only single accuracy figures, attending only to the true positive category — telling only part of the whole accuracy-error story — and put these figures in probability instead of frequency format, leading people to make worse inferences from them.

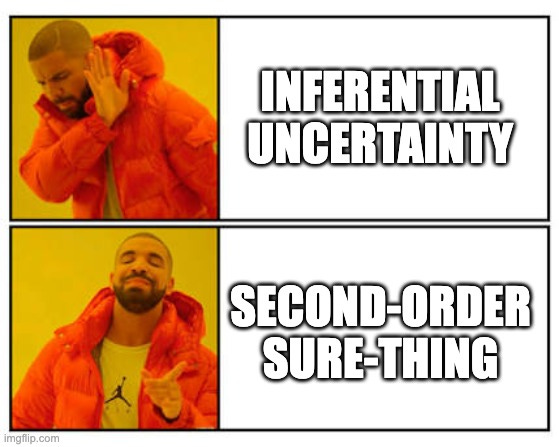

Both representations stylize away uncertainty. But inferential uncertainty in the security realm has tremendous practical implications that policymakers need to think about. This post focuses on them.

Briefly on the other two cognitive biases distorting general understanding of this type of program…

(2) Base rate blindness

Base rate blindness (aka the base rate fallacy, base rate bias, base rate neglect, and the prosecutor’s or defense attorney’s fallacy) is when people ignore the base rate, or rate of occurrence (incidence, prevalence), of the thing of concern, like a crime or disease. A previous post explained how probability theory (Bayes’ Rule) implies that even highly accurate mass screenings will generate very large numbers of bad leads, or false positives, which may itself have considerable implications for security as well as liberty.
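To make the base rate point concrete, here is a minimal Python sketch of a frequency-format confusion matrix. Every number in it (a population of one million, a prevalence of 1 in 1,000, 99% sensitivity and specificity) is a made-up assumption for illustration, not an estimate for any real program, and the function names are hypothetical.

```python
def screen(population, prevalence, sensitivity, specificity):
    """Expected counts from a mass screening, as a frequency-format confusion matrix."""
    with_condition = population * prevalence
    without_condition = population - with_condition
    true_pos = with_condition * sensitivity        # hits
    false_neg = with_condition - true_pos          # misses
    true_neg = without_condition * specificity     # correctly cleared
    false_pos = without_condition - true_neg       # bad leads
    return {
        "true_pos": round(true_pos),
        "false_neg": round(false_neg),
        "false_pos": round(false_pos),
        "true_neg": round(true_neg),
    }


def share_of_flags_that_are_real(counts):
    """Positive predictive value: true positives divided by everyone flagged."""
    flagged = counts["true_pos"] + counts["false_pos"]
    return counts["true_pos"] / flagged


# Hypothetical inputs, chosen only to illustrate the base rate effect.
counts = screen(population=1_000_000, prevalence=0.001,
                sensitivity=0.99, specificity=0.99)
for outcome, n in counts.items():
    print(f"{outcome:>10}: {n:>9,}")
print(f"share of flags that are real: {share_of_flags_that_are_real(counts):.1%}")
```

Under these assumed inputs, 990 true positives sit among 9,990 false positives, so only about 9 in every 100 flags point at a real case, even though the tool is "99% accurate" in both directions.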

It’s worth highlighting again that the math here means that increasing one type of accuracy comes at the expense of decreasing another. So a single accuracy figure only ever tells a fraction of the whole story, an under-recognized fact in current real-world uses of these programs. For example, in her fieldwork with the Los Angeles Police Department on Palantir’s predictive policing AI Gotham, University of Texas at Austin Assistant Professor of Sociology Sara Brayne witnessed a Palantir engineer helping police conduct a search in apparent ignorance of the statistical (and thus practical) meaning of the results:

To make sense of Palantir Gotham’s data, police often need input from engineers, some of whom are provided by Palantir. At one point in her research, Brayne watched a Palantir engineer search 140 million records for a hypothetical man of average build driving a black four-door sedan. The engineer narrowed those results to 2 million records, then to 160,000, and finally to 13 people, before checking which of those people had arrests on their records. At various points in the search, he made assumptions that could easily throw off the result — that the car was likely made between 2002 and 2005, that the man was heavy-set. Brayne asked what happened if the system served up a false positive. “I don’t know,” the engineer replied.

This type of ignorance of the implications of probability theory in mass security screenings for low-prevalence problems poses real and present threats to justice and security globally. The Palantir flagship product Gotham, developed in its longstanding intelligence community collaboration, has been used as a predictive policing system in places like LA, where it’s been widely criticized for techwashing racism. Like many American military-industrial technologies, it has been exported to Europe.

For instance, data gathered by Gotham have been used by the European police agency Europol. In Denmark, the POL-INTEL project — based on Gotham and in use since 2017 — appears to include data on whether people are citizens and non-Western to produce a crime heat map. And in Germany, Palantir’s Hessendata has been in operation in the Hessian police force since 2017.

The German example is troubling for what it suggests about the enforceability of the law under conditions of nontransparency. Hesse (Hessen) is a central-southwestern German state in which several police officers were recently investigated for alleged far-right links. This February, the German Constitutional Court ruled that the regulations governing the current use of the program were unconstitutional, and demanded improvements by September through a constitutional amendment that the Hesse state legislature can make. The amendment should better specify the cases in which the tool may be used, and how long the data can be stored.

That’s a good-faith move toward rule of law. But descriptions of what Hessendata is and does do not seem to match what Gotham is and does, even though Hessendata is based on Gotham. Telling courts “this is Google for police” doesn’t cut it. And independent researchers like me can’t get access to relevant data to question what is going on here beyond saying maybe it doesn’t add up, and we would like more transparency please.

At the broader level, this is also about why using protected categories like race and nationality in some decision-making processes (including with AI) is bad, and in others is good. A future post will explore that. Suffice it to say for now that common cognitive biases plus punitive intent in the security realm make Palantir’s use of such categories in programs like Gotham and POL-INTEL bad, and we should wonder what is really going on with programs like Hessendata for the same reasons. There are serious concerns here about rule of law — including concerns that these programs may accidentally undermine security — as well as discrimination. Police and the courts should work with independent researchers including methodologists to audit what programs like this actually entail. There are a lot of applicable insights here.

(3) Reification

Moving back up to the broad outline of the main argument against this type of program, Greenland defines “statistical reification” as “treating hypothetical data distributions and statistical models as if they reflect known physical laws rather than speculative assumptions for thought experiments.” In this context, the common mistake is treating hypothetical estimated outcomes of this type of program (numerical outcome estimates) as if they map onto different kinds of practical effects (values). That mapping fits the rhetorical logic (which reflects a logical mistake) that such programs offer security benefits in exchange for liberty costs.

The logical mistake here is to think (in the distortion’s most logical iteration of several possibilities) that the costs of mass surveillance borne by estimated false positives plus true positives represent a net liberty cost to society, which we can choose to trade for a net security benefit consisting of the true positives minus the false negatives. But these values do not neatly map onto these matrix outcomes in that or any other way. The next post goes deeper into this widespread type of theoretical-practical mapping mistake (itself a huge problem across the sciences) and its instantiation in the false “liberty versus security” opposition often used to talk about this type of program’s societal implications.

Politics: Playing Whack-A-Mole Is Dumb

So mass security screenings for low-prevalence problems are alike in structure, common cognitive biases distort general understanding of how incredibly stupid and dangerous they are for society, and, accordingly, there has been an ongoing game of Whack-A-Mole for decades. People who oppose such programs focus mostly on fighting individual programs on their respective subject area terrains. New programs like this keep popping up, and people who care about the specific terrain on which they would rain down destruction then fight them there.

This is bad strategy. It divides and conquers finite subject-area expert, scientist (qua generalist, methodologist), activist, and other stakeholder resources. The wiser alternative would be to instead recognize that opponents of each of these individually stupid programs have a collective interest in banning the type.

In my experience, people often dislike it when you tell them they have a collective action problem with another group they don’t consider theirs (e.g., multiple sclerosis patient advocacy groups and lupus/other autoimmune disease patient advocacy groups). Dealing with this reaction is not my department. I just point out this strategy is a bad move.

It also misses the key empirical point: Much as we may wish to escape math and logic, basing policy on such wishful thinking is a very bad idea (TM).

So this is a hopeful set of posts about how science communication might serve democracy, human rights, and security all-around. It begins (if you can still call this a beginning) by asking why we don’t just want to use what might seem to be the best tools we have to fight crime. After all, isn’t something like 80% accuracy at least way better than nothing?

Isn’t Using Highly Accurate Mass Screenings to Catch the Baddies A Great Idea?

No; yes.

No: Under conditions of inferential uncertainty, it is difficult or impossible to validate results. Then you aren’t sure what you’re trading off. You can’t justify one trade by pretending it’s another one that’s not actually on the table. Especially not in the security realm. This is about existential threat. We need to deal in reality as much as possible. We would really like to get this right.

Yes: When there is a second-order sure-thing test, or set of tests, to sort out true and false negatives and positives, then mass screenings for low-prevalence problems often make sense. Especially in the health realm, where preventive medicine may save and help people lead better lives. This is why we had to let strangers feel up our backs every so often when I went to elementary school in Alabama; I guess identifying scoliosis earlier is better, and the only harm done was my acute embarrassment. Screening all pregnant women for STDs like HIV similarly makes sense and is common practice globally, even where the problem is super rare, because you can definitively sort out the false positives from the true positives in the second step. (Which is an especially good thing, because COVID-19 may have ramped up those false positives — it shares a spike protein similarity with HIV.)
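A small Python sketch of why the second step matters: it applies Bayes' Rule twice, once for the mass screen and once for a confirmatory test. The prevalence and test characteristics are hypothetical, and the sketch assumes the two tests' errors are independent, which a real confirmatory protocol would need to justify.

```python
def posterior(prior, sensitivity, specificity):
    """Bayes' Rule: probability of having the condition given a positive result."""
    true_pos = prior * sensitivity
    false_pos = (1 - prior) * (1 - specificity)
    return true_pos / (true_pos + false_pos)


# Hypothetical numbers for illustration only.
prevalence = 0.001

# Step 1: the mass screen. A positive here is weak evidence at low prevalence.
after_screen = posterior(prevalence, sensitivity=0.99, specificity=0.99)

# Step 2: a near-definitive confirmatory test, applied only to those flagged.
# Assumption: its errors are independent of the first test's errors.
after_confirmation = posterior(after_screen, sensitivity=0.999, specificity=0.9999)

print(f"after screen:       {after_screen:.1%}")
print(f"after confirmation: {after_confirmation:.1%}")
```

With these assumed inputs, a positive screen alone leaves the probability at roughly 9%, while a positive confirmation pushes it above 99%. Take away the second step, as in security screenings with no independent ground truth, and you are stuck at the first number.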

Why Does Inferential Uncertainty Make These Screenings Worse Than Nothing?

Conditions of persistent inferential uncertainty play out in three ways that can be seen as distinct reasons why even highly accurate mass screenings for low-prevalence problems turn out to be way worse than doing nothing at all (which is not even the real point of contrast — we have better tools). Those three reasons are (1) ignorance, (2) lies, and (3) subversives.

(1) Ignorance

By ignorance, and really by inferential uncertainty in the first place, I mean that it’s hard to know for sure what the outcomes of mass security screenings really are. For example, in the lab, you know who the thief was when you run a mock crime “lie detection” study. But in the real world, you often don’t have an independent way of confirming whether someone is telling the truth or lying; that’s the problem in the first place.

There is no “get out of uncertainty free” card. Scientists struggle with this just like everybody else, and a lot of brilliant things have been written about it by leading methodologists that I’m not referencing here to keep it short (see, e.g., this and its references). Science does not solve the problem that consciousness is non-transferrable, and that sucks for us when we want to know what’s really going on with other people. We want to winnow away that uncertainty, and it may feel as though highly accurate mass screenings offer that chance. But that perception is a product of dichotomania and base rate blindness.

(2) Lies

Second, people lie. Again, that’s the problem in the first place. Law enforcement want to prevent, detect, and punish crime. Criminals want to beat them at it. This generates things like lying, evasive behavior, and threats; good policing makes perverse incentives for more bad behavior. That’s not a reason we shouldn’t have law enforcement. It’s just a structural fact. More on this below.

But criminals aren’t the only ones who respond to perverse incentives with bad behavior. Researchers do, too. High purported accuracy rates for mass security screenings like “lie detection” programs and Chat Control are lies. Call them mathematical representations of highly stylized models reflecting unrealistic simplifying assumptions if you wish. The bottom line is that AI is usually trained on samples that look very different from the broader population for which they’re intended. This and other facets of artificiality create a typical pattern of vastly inflated accuracy rates that tank when they move from the lab to the field. When you hear an AI accuracy figure, listen nicely and subtract 10% to be generous.

This pattern is especially problematic when field validation is impossible, as in the case of “lie detection”: If you expect accuracy to drop from 80% in the lab to 70% in the real world, but you can’t validate field accuracy, then you don’t know how well the tool actually does in the real world and will never find out. So the high accuracy rates that proponents often ascribe to mass security screening tools for low-prevalence problems actually contain, at best, a number of uncertainties regarding error, bias, and limited generalizability that are not reflected in these figures.
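Here is a rough Python sketch of how the same kind of tool can look in a lab sample versus a field population. The `Setting` class and all its numbers are hypothetical: a balanced lab sample (half the subjects are "guilty") at 80% sensitivity and specificity, and a field population with 1-in-1,000 prevalence at the 70% figure used above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Setting:
    name: str
    prevalence: float    # share of people screened who have the condition
    sensitivity: float   # share of true cases the tool flags
    specificity: float   # share of non-cases the tool clears

    def accuracy(self) -> float:
        """Headline figure: share of all decisions that are correct."""
        return (self.prevalence * self.sensitivity
                + (1 - self.prevalence) * self.specificity)

    def ppv(self) -> float:
        """Share of flags that point at a real case."""
        true_pos = self.prevalence * self.sensitivity
        false_pos = (1 - self.prevalence) * (1 - self.specificity)
        return true_pos / (true_pos + false_pos)


# Hypothetical settings for illustration only.
lab = Setting("lab", prevalence=0.5, sensitivity=0.80, specificity=0.80)
field = Setting("field", prevalence=0.001, sensitivity=0.70, specificity=0.70)

for s in (lab, field):
    print(f"{s.name:>5}: accuracy {s.accuracy():.0%}, flags that are real {s.ppv():.2%}")
```

Under these assumptions the headline accuracy slips from 80% to 70%, which sounds survivable, while the share of flags that are real collapses from 80% to about 0.2%. And that is with a field accuracy you were somehow able to measure.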

We can and should still use hypothetical accuracy rates to look at these tools and their implications for society. But we must remember that they are not real. They are stories. Some numbers are measurements and others are metaphors; these are the latter.

This gets into the reification problem the next post focuses on. We need to make the leap from math to reality, to be sure we know what we’re really talking about. But we need to make it better.

(3) Subversives

Third, mass security screenings for low-prevalence problems don’t happen in a societal vacuum. They happen in dynamic social systems with structural incentives. People respond to those incentives. So people with an incentive to avoid detection through mass security screenings are likely to respond to that incentive by avoiding that detection, or at least trying, at least some of the time. That will tend to decrease field accuracy more by increasing false negatives.
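One hedged way to picture this in Python: treat the true targets as a mix of subgroups with different detection rates. The split below (90% casual targets detected at the lab rate, 10% dedicated evaders detected 10% of the time) is invented purely for illustration.

```python
def effective_sensitivity(groups):
    """Weighted average detection rate across subgroups of true targets.

    groups: list of (share_of_targets, detection_rate) pairs; shares must sum to 1.
    """
    total_share = sum(share for share, _ in groups)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("shares of targets must sum to 1")
    return sum(share * rate for share, rate in groups)


# Hypothetical: nobody evades, everyone is detected at the lab rate.
no_evasion = effective_sensitivity([(1.0, 0.80)])

# Hypothetical: 10% of targets are dedicated evaders, detected only 10% of the time.
with_evasion = effective_sensitivity([(0.90, 0.80), (0.10, 0.10)])

print(f"sensitivity without evasion: {no_evasion:.0%}")
print(f"sensitivity with evasion:    {with_evasion:.0%}")
print(f"dedicated evaders missed:    {1 - 0.10:.0%}")
```

The overall figure only drops from 80% to 73% in this toy setup, which hides the fact that nine in ten of the dedicated evaders get through. The aggregate number barely moves while the misses pile up in exactly the subgroup that matters most.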

That means we don’t really know how accurate these tools are under real-world conditions, researchers respond to perverse incentives to inflate and otherwise misrepresent accuracy, and criminals respond to incentives to subvert these tools, lowering real-world accuracy to an unknown degree in what is probably the subset of cases we care most about (dedicated attackers). This is bad, and AI may worsen all three of these problems. I once wrote a dissertation about how technology-mediated decisions may look neutral and sciencey, but sometimes really aren’t (don’t blame me, I was young and I needed the money). While I have aged a decade, this problem persists.

Trust Is A Telephone Game and AI Is Still Playing It

Governments, corporations, and other powerful entities want to increase their accuracy in detecting something bad. Tech seems like a great way to do that — new, hip, sciencey, and cost-saving. So again, why not use all the tools? If algorithmic decision-making increases accuracy and efficiency, why limit its uses?

Because nontransparency compounds the problems of ignorance, lies, and subversives. The imagined net accuracy and efficiency gains may or may not exist. The base rate fallacy suggests that they probably don’t, at least not when we’re talking about mass screenings for low-prevalence problems. Rather, these programs wreak havoc on society through false positives, false negatives, and opportunity costs. But the nontransparency problem means that we’ll never find out the true extent of the damage they do.

(1) Ignorance

The black-box nature of the machine learning algorithms typical of AI compounds the validation problem. Our real-world inferential uncertainty does not go away just because a machine gives a result. Instead, new technological links in the inferential chain introduce new opportunities for bias and error. Worse, it’s very difficult to envision a way to check in advance for the many forms of bias and error that AI could generate. To paraphrase Tolstoy, all happy algorithms are alike; each unhappy algorithm is unhappy in its own way.

That’s why the myth that AI can be objective is dangerous. The myth is that tech gets decisions closer to some Platonic truth, because it takes human interpretation out of the analytical loop, at least temporarily, thus avoiding or minimizing the possibilities of bias and error. The reality is that, instead, AI may pack the biases of the humans who made it, the sample it was trained on, and more, into nontransparent decisions that add layers of interpretive choices, layers just like the ones old-fashioned decision-making processes (including statistical analyses) have always had. In fact, AI may add (net) a layer or two to the telephone game of perception and interpretation, instead of reducing those layers. But without a paper trail (a way to measure the resulting distortion and its real-world effects), a trail we don’t have in part because of inferential uncertainty, we have no way of actually knowing for sure whether or to what extent that’s a problem.

That’s why the first (Commission) draft of the AI Act classified as high risk things like the UK’s recent embrace of AI welfare benefits fraud detection, stating:

Natural persons applying for or receiving public assistance benefits and services from public authorities are typically dependent on those benefits and services and in a vulnerable position in relation to the responsible authorities. If AI systems are used for determining whether such benefits and services should be denied, reduced, revoked or reclaimed by authorities, they may have a significant impact on persons’ livelihood and may infringe their fundamental rights, such as the right to social protection, nondiscrimination, human dignity or an effective remedy. Those systems should therefore be classified as high-risk. Nonetheless, this Regulation should not hamper the development and use of innovative approaches in the public administration, which would stand to benefit from a wider use of compliant and safe AI systems, provided that those systems do not entail a high risk to legal and natural persons. — Excerpted from paragraph 37, p. 27.

This is not a ban. In part, that is because it’s from the Commission version; it’s the Parliament version that listened to civil society groups and drew what Thomas Metzinger might call meaningful red lines for industry. Democracy is cool.

It’s also in part because the larger structure of the AI Act is a pyramid with (1) unacceptable risk tech (banned) up top, followed by (2) high risk (permitted with certain requirements including assessment before deployment), (3) AI with specific transparency obligations, and (4) minimal or no risk (no requirements) (European Commission, “Shaping Europe’s Digital Future,” p. 7).

Whatever version ultimately passes, this approach stands in sharp contrast to increasing, unregulated worldwide use of AI, including higher-risk types. In the UK benefits case, the government recently extended its use of machine learning to detect potential fraud and error in welfare — a move Big Brother Watch UK criticized due to the nontransparency of the models, saying this may lead to unchecked bias. This type of development situates the AI Act as a pendulum swing back toward rule of law that new tech may otherwise threaten. Rule of law requires consistency (the same rules for everyone), but AI’s nontransparency compounds our inferential uncertainty such that we can’t know if the same rules are applying to everyone, or not.

(2) Lies and (3) Subversion

Nontransparency also compounds the problems of researchers lying about accuracy and criminals subverting mass screening. As a recent joint statement from 300+ scientists warns, scanning AI — like that proposed for mass digital surveillance in Chat Control — generates unacceptable numbers of false positives and false negatives, and is vulnerable to attacks. But it’s hard to prove how the numbers shake out, and what the net consequences would be of dedicated attackers gaming these systems.

This is not to say that ignorance, lies, or subversion are anything new… Surely we just want to make relative net gains using better tech, and AI can help us do that?

Trapped in the Accuracy-Error Trade-Off

We are trying so hard to get things right with new tech that seems to offer the promise of doing better. This is a noble effort.

But there’s no one way to do better, in the sense that there are four outcomes to improve (true positives, false positives, true negatives, and false negatives), and improving accuracy trades off with worsening error. It’s my understanding this is sometimes called the signal detection problem, often represented in modern statistics with ROC (receiver operating characteristic) curves and less often with DET (detection error trade-off) graphs. And it’s older than the evolution of our eyes and ears.
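For readers who want to see the trade-off move, here is a minimal signal detection sketch in Python using only the standard library. It assumes, purely for illustration, that screening scores for people without and with the condition follow two overlapping normal distributions; the separation of 1.5 and the thresholds are made up.

```python
from statistics import NormalDist

# Hypothetical score distributions (assumptions for illustration only).
noise = NormalDist(mu=0.0, sigma=1.0)    # people without the condition
signal = NormalDist(mu=1.5, sigma=1.0)   # people with the condition


def rates(threshold):
    """Hit rate and false alarm rate when everyone scoring above threshold is flagged."""
    hit_rate = 1 - signal.cdf(threshold)
    false_alarm_rate = 1 - noise.cdf(threshold)
    return hit_rate, false_alarm_rate


# Sweep the threshold from lenient to strict; each row is one point on an ROC curve.
roc = [(t, *rates(t)) for t in (-1.0, 0.0, 0.75, 1.5, 3.0)]
for threshold, hit_rate, false_alarm_rate in roc:
    print(f"threshold {threshold:+.2f}: "
          f"hits {hit_rate:.3f}, false alarms {false_alarm_rate:.3f}, "
          f"misses {1 - hit_rate:.3f}")
```

Moving the threshold never improves everything at once: each step that catches more true cases also flags more innocent people, and each step that spares innocent people lets more true cases through. As long as the two distributions overlap, driving misses to zero means flagging nearly everyone.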

No one is perfect at seeing or hearing, among other things. Sometimes, when there is nothing, we see or hear something, as “sensory noise yields genuinely felt conscious sensations even in the complete absence of stimulation,” according to University of California at San Diego psychologist John T. Wixted in “The Forgotten History of Signal Detection Theory.” Did false positives evolve as part of our perception because we have to trade off between accuracy and error? Is the accuracy-error trade-off a feature, not a bug? Can we really not escape it with AI?

I think probability theory implies that the accuracy-error trade-off is in us and with us for a reason. We live in a dynamic social ecosystem (the real world). At the individual level, consciousness researchers describe the nature of consciousness as a “controlled hallucination.” In that hallucination, “the noise distribution falls above, not below, the threshold of conscious awareness (i.e., neural noise generates genuinely felt positive sensations, not imaginary negative sensations)” (p. 15-16). Awareness is a metaphor for a facet of a consciousness that does not correspond one-to-one to empirical reality. Sometimes you see the lion coming for you, sometimes you miss it, and sometimes you flinch at the shadow of a flame. The accuracy-error trade-off isn’t just inescapable; it’s part of what has long kept us alive. When something has.

Finitude is a bitch. We are trapped in a real world of compromises with respect to these trade-offs. Seeing all the lions coming for you would mean being too jittery for normal tribe life — creating a security threat to the very (social) existence that your biological system evolved to protect. So we probably evolved to miscode some actually dangerous flickers or noises as nothing, since most of them are; and some nothing as something, since some nothing, isn’t.

It’s not just lions. According to probability theory, screening all the spies out of the National Labs would require getting rid of all the scientists. Pro tip: Having nuclear scientists is better for national security than not. The accuracy-error trade-off is inescapable.

Against this backdrop of the old, pre-human struggle to figure out what’s going on in the world around us without dying from too much paranoia or too many missed threats, we play out particular policy dramas embedded in complex modern societal conditions, expressed in theoretical numbers, and mapped onto value-laden words. Both the number and the word steps here introduce new opportunities for error (the reification distortion). Especially when people think that the words we use to stand in for values map neatly onto mathematical outcome estimates that map neatly onto real-world realities — when both of those translational steps are wrong. The next post explores why.